DAY 3 - Industry Connect

a. Difference between system, integration and E2E tests

System Testing

This is the level of testing that validates the complete, fully integrated software product. The purpose of a system test is to evaluate the system's compliance with its specified requirements. Usually, the software is only one element of a larger computer-based system and is ultimately interfaced with other software and hardware systems. System testing is actually a series of different tests whose sole purpose is to exercise the full computer-based system. It seeks to detect defects both within the “inter-assemblages” and within the system as a whole. The actual result is the behaviour produced or observed when a component or system is tested, and it is compared with the expected result.

System testing is carried out to achieve the following:

- Testing the fully integrated applications including external peripherals in order to check how components interact with one another and with the system as a whole.

- Thoroughly testing every input in the application to check for the desired outputs.

- Testing the user’s experience with the application.

Below are some types of system testing performed in the software industry:

- Usability Testing – mainly focuses on how easily users can use the application, how flexibly they can handle controls and how well the system meets its objectives.

- Load Testing – is necessary to know that a software solution will perform under real-life loads.

- Regression Testing – makes sure that none of the changes made over the course of the development process have caused new bugs. It also makes sure that old bugs do not reappear as new software modules are added over time.

- Recovery Testing – is done to demonstrate that a software solution is reliable and trustworthy and can successfully recover from possible crashes.

- Migration Testing – is done to ensure that the software can be moved from older system infrastructures to current system infrastructures without any issues.

- Functional Testing – Also known as functional completeness testing, it tries to detect any missing functions. During functional testing, testers might also list additional functionality that could improve the product.

- Hardware/Software Testing – The tester focuses on the interactions between the hardware and software during system testing.

Integration Testing

Integration testing is a type of testing in which software modules or systems are integrated logically and tested as a group. A typical software project consists of multiple software modules coded by different programmers. The purpose of this level of testing is to expose defects in the interaction between these modules when they are integrated. Integration testing focuses on checking the data communication between these modules against the requirements in the system specification.
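A minimal Python sketch of an integration test follows. It assumes two hypothetical modules, `InventoryStore` and `OrderService`, written by different developers. The tests wire the real modules together without mocks and check the data that crosses the boundary between them. They are written as plain functions, so a runner such as pytest could also collect them.

```python
# Hypothetical module A: keeps stock levels.
class InventoryStore:
    def __init__(self):
        self._stock = {}

    def add(self, sku, qty):
        self._stock[sku] = self._stock.get(sku, 0) + qty

    def remove(self, sku, qty):
        if self._stock.get(sku, 0) < qty:
            raise ValueError(f"insufficient stock for {sku}")
        self._stock[sku] -= qty

    def level(self, sku):
        return self._stock.get(sku, 0)


# Hypothetical module B: places orders through module A's interface.
class OrderService:
    def __init__(self, store):
        self.store = store

    def place_order(self, sku, qty):
        try:
            self.store.remove(sku, qty)
        except ValueError:
            return "REJECTED"
        return "CONFIRMED"


# Integration tests: real modules, checking the data passed between them.
def test_order_reduces_inventory():
    store = InventoryStore()
    store.add("SKU-1", 5)
    service = OrderService(store)
    assert service.place_order("SKU-1", 3) == "CONFIRMED"
    assert store.level("SKU-1") == 2


def test_order_rejected_when_stock_is_short():
    store = InventoryStore()
    store.add("SKU-1", 1)
    service = OrderService(store)
    assert service.place_order("SKU-1", 2) == "REJECTED"
    assert store.level("SKU-1") == 1  # stock is untouched after a rejected order


if __name__ == "__main__":
    test_order_reduces_inventory()
    test_order_rejected_when_stock_is_short()
    print("integration tests passed")
```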

End-to-End Testing (E2E)

End-to-end (E2E) testing is a software testing method that tests an application's workflow from beginning to end. It aims to replicate real user scenarios so that the system can be validated for integration and data integrity. The test goes through the operations the application performs to check how it communicates with hardware, network connectivity, external dependencies, databases and other applications. E2E testing is usually executed after functional and system testing are complete. Modern software systems are complex and interconnected with numerous subsystems, and if any subsystem fails, the whole system could fail. End-to-end testing helps mitigate this major risk.

b. Difference between regression, sanity and smoke tests

Smoke Testing

Smoke testing is usually carried out during the initial development stages of the Software Development Life Cycle (SDLC) to make sure that the core functionality of a program works without issues. It is executed before any detailed functional tests are done on the software.

The main intent of smoke testing is not to perform deep testing but to verify that the core or main functionalities of the program or the software are working fine. Smoke testing aims to reject a badly broken build in the initial stage so that the testing team does not waste time in installing & testing the software application.

Smoke testing is also called Build Verification Testing.

Consider a simple example in which you are given an email application to test. The important functions are logging in, composing an email and sending it. If the email cannot be sent, there is no point testing other functionality such as drafts, deleted messages or archives, so you reject the build without further validation. This is smoke testing.
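The email example can be sketched as a tiny smoke test in Python. `FakeEmailClient`, its methods and the `alice`/`secret` test account are hypothetical stand-ins for the real application. Only the critical path (log in, compose, send) is checked, and any failure there rejects the build.

```python
class FakeEmailClient:
    """Hypothetical stand-in for the email application under test."""

    def __init__(self, users):
        self.users = users      # username -> password
        self.session = None     # logged-in user, if any
        self.outbox = []

    def login(self, user, password):
        if self.users.get(user) != password:
            return False
        self.session = user
        return True

    def send(self, to, subject, body):
        if self.session is None:
            raise PermissionError("not logged in")
        self.outbox.append({"to": to, "subject": subject, "body": body})
        return True


def smoke_test(client):
    """Check only the critical path; an empty result means 'accept the build'."""
    failures = []
    if not client.login("alice", "secret"):  # hypothetical test account
        failures.append("login")
        return failures  # nothing else can be tested without a session
    try:
        sent = client.send("bob@example.com", "Smoke test", "Hello")
    except Exception as exc:  # any error on the critical path rejects the build
        failures.append(f"send raised {exc!r}")
    else:
        if not sent:
            failures.append("send returned False")
    return failures


if __name__ == "__main__":
    failed = smoke_test(FakeEmailClient({"alice": "secret"}))
    print("REJECT build:" if failed else "ACCEPT build", failed)
```

Drafts, archives and other secondary features are deliberately absent. They belong to the deeper testing that only starts once this check passes.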

The main focus of smoke testing is to test the critical areas and not the complete application.

When to perform Smoke Testing

- When developers provide a fresh build to the QA team. A fresh build here means a build that contains new changes made by the developers.

- When a new module is added to the existing functionality.

Automation & Smoke Testing:

Smoke testing is usually executed before the actual automated test cases can run. For organizations with continuous testing built in, smoke testing amounts to a successful installation of the build for running test cases, or a successful execution of the first test case. So it is not a type of testing that is deliberately automated. However, once test automation is in place, the automated tests can only run successfully after the software has passed smoke testing; otherwise the first test case that executes might fail.

Sanity Testing

Sanity testing is performed to check whether a software product works correctly when a new module or functionality is added to an existing product. It is a quick evaluation of the quality of a software release to determine whether it is eligible for further rounds of testing.

Sanity testing is usually performed after receiving a fairly stable software build, or when a build has undergone minor changes in code or functionality. It decides whether end-to-end testing of the software product should be carried out further.

Sanity testing is also surface-level testing that helps decide whether the software build is good enough to pass to the next level of testing.

Why perform Sanity Testing

- To verify and validate the conformity of newly added functionalities and features in existing code.

- To ensure that the introduced changes do not affect other existing functionalities of the product.

- To decide whether further testing can be carried forward.

When to perform Sanity Testing

- When a build is received after many regression cycles, or when there is a minor change in the code.

- When a build is received after bug fixes.

- Just before deployment to production.

Automation & Sanity Testing:

Since sanity testing is considered a subset of regression testing, its test cases can be automated. A recommended approach is to execute them before running the complete regression test suite. The benefit is that any errors in the sanity test cases are reported sooner rather than later.

There are companies that have benefited from automating their sanity test cases. Here is a case study where Freshworks – a feature packed product suite for businesses of all sizes – was able to automate 100% of their sanity test cases in 90 days using Testsigma: Testsigma+Freshworks – A case study

Regression Testing

Regression testing verifies bug fixes or changes in requirements and makes sure they do not affect other functionality of the application. Regression testing is well suited to automation and is usually performed after the software build has been modified because of requirement changes or bug fixes.

Once sanity testing of the changed functionality is complete, all the impacted features of the application require complete testing. This is called regression testing.

Whenever bugs are fixed in existing software, some test scenarios need to be executed to verify the fixes. In addition, the QA team has to check the areas impacted by the code changes. In regression testing, all of these test scenarios are executed to cover the related functionality.
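As an illustration, suppose a hypothetical `parse_price` function once crashed on prices with a thousands separator. After the fix, a new test tied to that bug joins the suite. The existing tests are re-run as well, to confirm that the change did not break older behaviour. The function and its history are invented for this sketch.

```python
from decimal import Decimal


def parse_price(text):
    """Convert a price string such as "$1,299.50" to a Decimal.

    Hypothetical fix: an earlier version did not strip the thousands
    separator, so "1,299.50" raised an error.
    """
    cleaned = text.strip().lstrip("$").replace(",", "")
    return Decimal(cleaned)


# Existing tests: re-run after the fix to confirm old behaviour is unchanged.
def test_plain_price():
    assert parse_price("19.99") == Decimal("19.99")


def test_dollar_sign():
    assert parse_price("$5") == Decimal("5")


# New regression test pinned to the fixed bug so it cannot silently return.
def test_thousands_separator_regression():
    assert parse_price("$1,299.50") == Decimal("1299.50")


REGRESSION_SUITE = [test_plain_price, test_dollar_sign, test_thousands_separator_regression]

if __name__ == "__main__":
    for test in REGRESSION_SUITE:
        test()
    print(f"{len(REGRESSION_SUITE)} regression tests passed")
```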

When to perform Regression Testing

- After code is modified according to changed requirements

- After new features are added to the application

- After bug fixes are incorporated into the build

Automation & Regression Testing:

Regression test cases are ideal candidates for automation. Usually, when an organization starts automating, these are the first test cases it automates. If regression testing takes up a lot of your testers' time and the same test cases are repeated many times, it is time to start thinking about automation.

If you are looking for an automated regression testing tool to get started on your automation journey, you also need to make sure you choose the right one: a tool that provides a return on investment (ROI) for the effort invested. The following guide can help:

https://testsigma.com/blog/10-points-to-help-you-choose-the-right-test-automation-tool/

Differences Between Smoke, Sanity and Regression Testing

| Smoke Testing | Sanity Testing | Regression Testing |
|---|---|---|
| Performed on initial builds | Performed on stable builds | Performed on stable builds |
| Tests the stability of a new build | Tests the stability of new functionality or code changes in the existing build | Tests the functionality of all affected areas after new functionality or code changes in the existing build |
| Covers basic end-to-end functionality | Covers the specific modules in which code changes have been made | Covers detailed testing of all the affected areas after new functionality is added |
| Executed by testers, and sometimes also by developers | Executed by testers | Executed by testers, mostly via automation |
| Part of basic testing | Part of regression testing | A superset of smoke and sanity testing |
| Usually done every time there is a new build | Planned when there is not enough time for in-depth testing | Usually performed when testers have enough time |

Key Points

- Smoke and sanity testing save the QA team time by quickly checking whether an application is working properly and whether the product is eligible for further testing. Regression testing, by contrast, increases confidence in software quality after a particular change, especially that the code changes do not affect related areas.

- Smoke testing can be done by either the development team or the QA team and can be taken as a subset of rigorous testing, whereas sanity and regression testing are done only by the QA team. Sanity testing can also be considered a subset of acceptance testing.

- Smoke testing is executed at the initial stage of the SDLC to check the core functionality of an application, whereas sanity and regression testing are done at the final stage of the SDLC to check its main functionality.

- Depending on testing requirements and available time, the QA team may have to run smoke, sanity and regression tests on the same build. In such cases, smoke tests are executed first, followed by sanity testing, and regression testing is then planned based on the time available.
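The ordering above (smoke first, then sanity, then regression if time allows) can be sketched as a small Python runner. The stage names, the `time_budget_allows_regression` flag and the use of plain callables as checks are assumptions made for illustration. CI tools usually express the same idea as pipeline stages.

```python
from typing import Callable, Dict, List, Tuple

Check = Callable[[], bool]  # a check returns True when it passes


def run_stages(stages: List[Tuple[str, List[Check]]],
               time_budget_allows_regression: bool = True) -> Dict[str, int]:
    """Run test stages in order and stop at the first stage with failures.

    Returns a mapping from each stage that ran to its number of failed checks.
    """
    results: Dict[str, int] = {}
    for name, checks in stages:
        if name == "regression" and not time_budget_allows_regression:
            break  # regression testing is planned only when time allows
        failed = sum(1 for check in checks if not check())
        results[name] = failed
        if failed:
            break  # report early instead of running the larger suites
    return results


if __name__ == "__main__":
    # Hypothetical checks standing in for real test cases.
    stages = [
        ("smoke", [lambda: True, lambda: True]),
        ("sanity", [lambda: True]),
        ("regression", [lambda: True, lambda: False]),
    ]
    print(run_stages(stages))  # {'smoke': 0, 'sanity': 0, 'regression': 1}
```

Stopping at the first failing stage reflects the idea that a broken build should be reported before the larger, slower suites are run.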

In practice, every QA team needs to do smoke, sanity and regression testing. Each of these testing types has a predefined set of test cases that are executed many times, and this repetitive execution makes them ideal candidates for test automation. When choosing an automation tool, it is recommended to use one that provides ROI on automation from the initial stages. Testsigma is one such tool.

c. Difference between Alpha and Beta tests

Alpha testing is performed by testers within the organization, whereas beta testing is performed by end users.

Alpha testing is performed at the developer's site, whereas beta testing is performed at the client's location.

d. Difference between black box and white box tests

Black Box Testing is a software testing method in which the internal structure/design/implementation of the item being tested is not known to the tester.

In black box testing, the tester has no information about the internal workings of the software system. It is high-level testing that focuses on the behavior of the software and tests from an external or end-user perspective. Black box testing can be applied to virtually every level of software testing: unit, integration, system and acceptance.
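Here is a small Python sketch of black box test design. It assumes a hypothetical requirement: children under 12 pay 5, people aged 12 to 64 pay 10, people 65 and over pay 7, and a negative age is rejected. The tester derives the cases from that specification alone, choosing one value per class plus the values around each boundary. `ticket_price` is written out only so that the example runs.

```python
def ticket_price(age):
    """Implementation under test; the black-box tester never reads this body."""
    if age < 0:
        raise ValueError("age must be non-negative")
    if age < 12:
        return 5
    if age < 65:
        return 10
    return 7


# Cases derived from the hypothetical specification only:
# one value inside each class plus the values on either side of each boundary.
SPEC_CASES = [(0, 5), (6, 5), (11, 5), (12, 10), (30, 10), (64, 10), (65, 7), (90, 7)]


def test_prices_match_specification():
    for age, expected in SPEC_CASES:
        assert ticket_price(age) == expected, f"age {age}: expected {expected}"


def test_negative_age_is_rejected():
    try:
        ticket_price(-1)
    except ValueError:
        return
    raise AssertionError("a negative age should be rejected")


if __name__ == "__main__":
    test_prices_match_specification()
    test_negative_age_is_rejected()
    print("black-box tests passed")
```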

White Box Testing is a software testing method in which the internal structure/design/implementation of the item being tested is known to the tester.

White box testing checks the internal functioning of the system. Testing is based on coverage of code statements, branches, paths or conditions. White box testing is considered low-level testing. It is also called glass box, transparent box, clear box or code-based testing. The white box testing method assumes that the path of the logic in a unit or program is known.
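For contrast, here is a white box sketch in Python. The tester reads the code of a hypothetical `shipping_fee` function and chooses inputs so that every branch outcome runs at least once (branch coverage). The fee rules are invented for the example.

```python
def shipping_fee(weight_kg, express):
    if weight_kg <= 0:                    # branch A: invalid input
        raise ValueError("weight must be positive")
    if weight_kg <= 2:                    # branch B: flat rate
        fee = 5.0
    else:                                 # branch C: surcharge per extra kg
        fee = 5.0 + (weight_kg - 2) * 1.5
    if express:                           # branch D: express doubles the fee
        fee *= 2
    return fee


# Tests chosen from the code so that, together, they take branches A, B, C
# and D, and also the path where D is skipped.
def test_branch_a_invalid_weight():
    try:
        shipping_fee(0, express=False)
    except ValueError:
        return
    raise AssertionError("expected ValueError for weight 0")


def test_branch_b_flat_rate_without_express():
    assert shipping_fee(2, express=False) == 5.0   # B taken, D skipped


def test_branch_c_surcharge_with_express():
    assert shipping_fee(4, express=True) == 16.0   # C and D taken: (5 + 2 * 1.5) * 2


if __name__ == "__main__":
    test_branch_a_invalid_weight()
    test_branch_b_flat_rate_without_express()
    test_branch_c_surcharge_with_express()
    print("all branches exercised")
```

In practice, a tool such as coverage.py (`coverage run --branch -m pytest`, then `coverage report`) measures which branches the tests actually reached, instead of relying on the comments.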

KEY DIFFERENCE

- In Black Box testing, testing is done without knowledge of the internal structure of the program or application, whereas in White Box testing, it is done with knowledge of the program's internal structure.

- Black Box testing does not require programming knowledge, whereas White Box testing does.

- The main goal of Black Box testing is to test the behavior of the software, whereas the main goal of White Box testing is to test the internal operation of the system.

- Black Box testing focuses on the external or end-user perspective, whereas White Box testing focuses on code structure, conditions, paths and branches.

- Black Box testing provides low-granularity reports, whereas White Box testing provides high-granularity reports.

- Black Box testing is less time-consuming, whereas White Box testing is more time-consuming.

e. Explain API testing with an example

API testing is a type of software testing that analyzes an application programming interface (API) to verify that it fulfills its expected functionality, security, performance and reliability. The tests are performed either directly on the API or as part of integration testing. An API is middleware code that enables two software programs to communicate with each other. It also specifies how an application requests services from the operating system (OS) or other applications.

Applications frequently have three layers: a data layer, a service layer (the API layer) and a presentation layer (the user interface, or UI, layer). The business logic of the application, which governs how users can interact with the services, functions and data held within the app, is in the API layer.

API testing focuses on analyzing the business logic as well as the security of the application and data responses. An API test is generally performed by making requests to one or more API endpoints and comparing the response with expected results.
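The following is a minimal, self-contained sketch of an API test that uses only the Python standard library. It starts a throwaway local server exposing a hypothetical `GET /users/<id>` endpoint and sends requests to it. It then compares the status code and JSON body with the expected results, once for a successful request and once for an unsuccessful one. In a real project, the base URL would point at the API under test instead of this local stub.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

USERS = {1: {"id": 1, "name": "Alice"}}  # hypothetical test data


class UserApi(BaseHTTPRequestHandler):
    """Stand-in API: GET /users/<id> returns the user as JSON, or a 404 error."""

    def do_GET(self):
        parts = self.path.strip("/").split("/")
        user = None
        if len(parts) == 2 and parts[0] == "users" and parts[1].isdigit():
            user = USERS.get(int(parts[1]))
        status, body = (200, user) if user else (404, {"error": "user not found"})
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):  # silence per-request logging
        pass


# An empty ProxyHandler makes the client ignore proxy settings for local calls.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def call(base_url, path):
    """Send GET base_url+path and return (status_code, parsed JSON body)."""
    try:
        with _OPENER.open(base_url + path, timeout=5) as resp:
            return resp.status, json.loads(resp.read())
    except urllib.error.HTTPError as err:  # 4xx/5xx responses still carry a body
        return err.code, json.loads(err.read())


def run_api_tests():
    server = HTTPServer(("127.0.0.1", 0), UserApi)  # port 0: the OS picks a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base = f"http://127.0.0.1:{server.server_address[1]}"
    try:
        # Successful request: expected status code and response body.
        assert call(base, "/users/1") == (200, {"id": 1, "name": "Alice"})
        # Unsuccessful request: expected error code and error message in the body.
        assert call(base, "/users/999") == (404, {"error": "user not found"})
    finally:
        server.shutdown()
        server.server_close()
    return True


if __name__ == "__main__":
    print("API tests passed:", run_api_tests())
```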

API testing is frequently automated and used by DevOps, quality assurance (QA) and development teams for continuous testing practices.

https://youtu.be/YxhxYooPeZI

How to approach API testing

An API testing process should begin with a clearly defined scope of the program as well as a full understanding of how the API is supposed to work. Some questions that testers should consider include:

- What endpoints are available for testing?

- What response codes are expected for successful requests?

- What response codes are expected for unsuccessful requests?

- Which error message is expected to appear in the body of an unsuccessful request?

Once factors such as these are understood, testers can begin applying various testing techniques. Test cases should also be written for the API. These test cases define the conditions or variables under which testers can determine whether a specific system performs correctly and responds appropriately. Once the test cases have been specified, testers can run them and compare the expected results with the actual results. The tests should check responses for:

- reply time

- data quality

- confirmation of authorization

- HTTP status codes

- error codes
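The checks in this list can be gathered into one helper. This Python sketch works on an already-recorded response, so it needs no network. The `ApiResponse` type, the `error_code` field and the `AUTH_REQUIRED` value are hypothetical; in practice, they would follow whatever the API's own contract defines.

```python
from dataclasses import dataclass, field


@dataclass
class ApiResponse:
    """A recorded response; in a real suite this comes from the HTTP client."""
    status: int
    elapsed_ms: float
    body: dict = field(default_factory=dict)


def check_response(resp, expected_status, max_ms, required_fields=(), expected_error=None):
    """Return a list of problems found in the response (an empty list means pass)."""
    problems = []
    if resp.status != expected_status:                       # HTTP status code
        problems.append(f"status {resp.status} != {expected_status}")
    if resp.elapsed_ms > max_ms:                             # reply time
        problems.append(f"slow: {resp.elapsed_ms} ms > {max_ms} ms")
    missing = [name for name in required_fields if name not in resp.body]
    if missing:                                              # data quality
        problems.append(f"missing fields: {missing}")
    if expected_error is not None and resp.body.get("error_code") != expected_error:
        problems.append(f"error_code {resp.body.get('error_code')!r} != {expected_error!r}")
    return problems


if __name__ == "__main__":
    ok = ApiResponse(200, 120.0, {"id": 7, "email": "a@example.com"})
    print(check_response(ok, 200, 500, required_fields=("id", "email")))  # []

    # Authorization check: a request sent without a token should be refused.
    no_token = ApiResponse(401, 35.0, {"error_code": "AUTH_REQUIRED"})
    print(check_response(no_token, 401, 500, expected_error="AUTH_REQUIRED"))  # []
```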

API testing can analyze multiple endpoints, such as web services, databases or web user interfaces. Testers should watch for failures or unexpected inputs. Response time should be within an acceptable agreed-upon limit, and the API should be secured against potential attacks.

Tests should also be constructed to ensure users can't affect the application in unexpected ways, that the API can handle the expected user load and that the API can work across multiple browsers and devices.

Testing should also cover nonfunctional aspects, including performance and security.

Types of API tests

Various types of API tests can be performed to ensure the application programming interface is working appropriately. They range from general to specific analyses of the software. Here are examples of some of these tests.

Validation testing includes a few simple questions that address the whole project. The first set of questions concerns the product: Was the correct product built? Is the designed API the correct product for the issue it attempts to resolve? Was there any major code bloat -- production of code that is unnecessarily long, slow and wasteful -- throughout development that would push the API in an unsustainable direction?

The second set of questions focuses on the API's behavior: Is the correct data being accessed in the predefined manner? Is too much data being accessed? Is the API storing the data correctly given the data set's specific integrity and confidentiality requirements?

The third set of questions looks at the efficiency of the API: Is this API the most efficient and accurate method of performing a task? Can any part of the codebase be altered or removed entirely to reduce impairments and improve overall service?

Functional testing ensures the API performs exactly as it is supposed to. This test analyzes specific functions within the codebase to guarantee that the API functions within its expected parameters and can handle errors when the results are outside the designated parameters.

Load testing is used to see how many calls an API can handle. This test is often performed after a specific unit, or the entire codebase, has been completed to determine whether the theoretical solution can also work as a practical solution when acting under a given load.
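Here is a rough Python sketch of the idea: call an endpoint many times from a pool of threads and report errors, throughput and latency. `fake_endpoint` is a hypothetical stand-in that simply sleeps for about 10 ms. Against a real API, `call` would wrap one HTTP request. Dedicated tools such as Apache JMeter do the same job at a much larger scale.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def load_test(call, total_calls=200, concurrency=20):
    """Make `total_calls` calls to `call` using `concurrency` worker threads.

    `call` is any zero-argument function that performs one request and raises
    on failure. Returns simple throughput and latency figures.
    """
    def timed_call(_):
        start = time.perf_counter()
        try:
            call()
            ok = True
        except Exception:
            ok = False
        return ok, (time.perf_counter() - start) * 1000  # latency in ms

    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(total_calls)))
    wall_s = time.perf_counter() - started

    latencies = sorted(ms for _, ms in results)
    return {
        "calls": total_calls,
        "errors": sum(1 for ok, _ in results if not ok),
        "throughput_per_s": total_calls / wall_s,
        # simple index-based 95th percentile of the sorted latencies
        "p95_ms": latencies[int(0.95 * (len(latencies) - 1))],
        "mean_ms": statistics.mean(latencies),
    }


def fake_endpoint():
    """Hypothetical stand-in for one API request (about 10 ms of work)."""
    time.sleep(0.01)


if __name__ == "__main__":
    print(load_test(fake_endpoint, total_calls=200, concurrency=20))
```

Raising `concurrency` step by step and watching where `errors` or `p95_ms` start to climb gives a first estimate of how many calls the API can handle.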

Reliability testing ensures the API can produce consistent results and the connection between platforms is constant.

Security testing is often grouped with penetration testing and fuzz testing in the greater security auditing process. Security testing incorporates aspects of both penetration and fuzz testing, but also attempts to validate the encryption methods the API uses as well as the access control design. Security testing includes the validation of authorization checks for resource access and user rights management.

Penetration testing builds upon security testing. In this test, the API is attacked by a person with limited knowledge of the API. This enables testers to analyze the attack vector from an outside perspective. The attacks used in penetration testing can be limited to specific elements of the API or they can target the API in its entirety.

Fuzz testing forcibly inputs huge amounts of random data -- also called noise or fuzz -- into the system, attempting to create negative behavior, such as a forced crash or overflow.
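Below is a minimal fuzzing sketch in Python. For brevity, it fuzzes a single in-process function rather than a whole API. `parse_query` is hypothetical, and its contract is assumed to be "return a dict or raise `ValueError`", so any other exception counts as a crash worth reporting. A fixed random seed makes each failing input reproducible.

```python
import random
import string


def parse_query(qs):
    """Hypothetical function under test: parse "a=1&b=2" into a dict.

    Assumed contract: returns a dict, or raises ValueError for bad input.
    """
    result = {}
    if not qs:
        return result
    for pair in qs.split("&"):
        key, sep, value = pair.partition("=")
        if not sep or not key:
            raise ValueError(f"malformed pair: {pair!r}")
        result[key] = value
    return result


def fuzz(target, iterations=10_000, max_len=40, seed=1234):
    """Feed random strings to `target` and collect inputs that break its contract."""
    rng = random.Random(seed)                          # fixed seed: reproducible runs
    alphabet = string.printable + "\x00\u00e9\u4e2d"   # ASCII plus a few odd characters
    crashes = []
    for _ in range(iterations):
        data = "".join(rng.choice(alphabet) for _ in range(rng.randint(0, max_len)))
        try:
            target(data)
        except ValueError:
            pass                                       # expected, documented rejection
        except Exception as exc:                       # anything else is a bug
            crashes.append((data, repr(exc)))
    return crashes


if __name__ == "__main__":
    found = fuzz(parse_query)
    print(f"{len(found)} unexpected crashes")
```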

API testing tools

When performing an API test, developers can either write their own framework or choose from a variety of ready-to-use API testing tools. Designing an API test framework enables developers to customize the test; they are not limited to the capabilities of a specific tool and its plugins. Testers can add whichever libraries they consider appropriate for their chosen coding platform, build unique and convenient reporting standards and incorporate complicated logic into the tests. However, testers need sophisticated coding skills if they choose to design their own framework.

Conversely, API testing tools provide user-friendly interfaces with minimal coding requirements that enable less-experienced developers to feasibly deploy the tests. Unfortunately, the tools are often designed to analyze general API issues and problems more specific to the tester's API can go unnoticed.

A large variety of API testing tools is available, ranging from paid subscription tools to open source offerings. Some specific examples of API testing tools include:

- SoapUI. A tool that focuses on testing API functionality in SOAP and REST APIs and web services.

- Apache JMeter. An open source tool for load and functional API testing.

- Apigee. A cloud API testing tool from Google that focuses on API performance testing.

- REST Assured. An open source Java library (a domain-specific language) that simplifies the testing of REST APIs.

- Swagger UI. An open source tool that generates a web page documenting an API from its definition.

- Postman. An API platform, originally a Google Chrome app, used for verifying and automating API tests.

- Katalon. A test automation application that helps with automated UI testing.

Examples of API tests

While the use cases of API testing are endless, here are two examples of tests that can be performed to guarantee that the API is producing the appropriate results.

When a user opens a social media app -- such as Twitter or Instagram -- they are asked to log in. This can be done independently -- through the app itself -- or through Google or Facebook. This implies the social media app has an existing agreement with Google and Facebook to access some level of user information already supplied to these two sources. An API test must then be conducted to ensure that the social media app can collaborate with Google and Facebook to pull the necessary information that will grant the user access to the app using login information from the other sources.

Another example is travel booking systems, such as Expedia or Kayak. Users expect all the cheapest flight options for specific dates to be available and displayed to them upon request when using a travel booking system. This requires the app to communicate with all the airlines to find the best flight options. This is done through APIs. As a result, API tests must be performed to ensure the travel booking system is successfully communicating with the other companies and presenting the correct results to users in an appropriate timeframe. Furthermore, if the user then chooses to book a flight and pays using a third-party payment service, such as PayPal, then API tests must be performed to guarantee the payment service and travel booking systems can effectively communicate, process the payment and keep the user's sensitive data safe throughout the process.

Best practices for API testing

API testing best practices include:

- When defining test cases, group them by category.

- Include the selected parameters in the test case itself.

- Develop test cases for every potential API input combination to ensure complete test coverage.

- Reuse and repeat test cases to monitor the API throughout production.

- Use both manual and automated tests to produce better, more trustworthy results.

- When testing the API, note what happens consistently and what does not.

- API load tests should be used to test the stress on the system.

- APIs should be tested for failures. Repeat tests until they produce a failed output, and test the API so that it fails consistently, which helps identify the problems.

- Call sequencing should be performed with a solid plan.

- Testing can be made easier by prioritizing the API function calls.

- Keep documentation thorough and easy to understand, and automate the documentation creation process.

- Keep each test case self-contained and separate from dependencies, if possible.
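Self-contained tests can be sketched with Python's built-in `unittest` and `unittest.mock`. The `BookingService` class and its airline client are hypothetical, loosely modelled on the travel booking example. Each test gets a fresh stub in `setUp`, so tests share no state and make no real network call.

```python
import unittest
from unittest import mock


class BookingService:
    """Hypothetical service that asks an airline API for fares."""

    def __init__(self, airline_client):
        self.airline_client = airline_client

    def cheapest_fare(self, origin, destination, date):
        fares = self.airline_client.search(origin, destination, date)
        if not fares:
            return None
        return min(fares, key=lambda fare: fare["price"])


class CheapestFareTest(unittest.TestCase):
    def setUp(self):
        # A fresh stub for every test: no shared state, no real network call.
        self.client = mock.Mock()
        self.service = BookingService(self.client)

    def test_picks_lowest_price(self):
        self.client.search.return_value = [
            {"flight": "XY1", "price": 320},
            {"flight": "XY2", "price": 210},
        ]
        fare = self.service.cheapest_fare("LHR", "JFK", "2024-05-01")
        self.assertEqual(fare["flight"], "XY2")
        self.client.search.assert_called_once_with("LHR", "JFK", "2024-05-01")

    def test_no_fares_returns_none(self):
        self.client.search.return_value = []
        self.assertIsNone(self.service.cheapest_fare("LHR", "JFK", "2024-05-01"))
```

To run the tests, use `python -m unittest` in the folder that contains the file. Because the airline dependency is stubbed, the tests can run in any order and do not need network access.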

f. What are the different types of non-functional testing?

The following are the most common types of non-functional testing:

- Performance Testing

- Load Testing

- Failover Testing

- Compatibility Testing

- Usability Testing

- Stress Testing

- Maintainability Testing

- Scalability Testing

- Volume Testing

- Security Testing

- Disaster Recovery Testing

- Compliance Testing

- Portability Testing

- Efficiency Testing

- Reliability Testing

- Baseline Testing

- Endurance Testing

- Documentation Testing

- Recovery Testing

- Internationalization Testing

- Localization Testing

Non-functional testing Parameters

1) Security: The parameter defines how a system is safeguarded against deliberate and sudden attacks from internal and external sources. This is tested via Security Testing.

2) Reliability: The extent to which a software system continuously performs the specified functions without failure. This is tested by Reliability Testing.

3) Survivability: The parameter checks that the software system continues to function and recovers itself in case of system failure. This is checked by Recovery Testing.

4) Availability: The parameter determines the degree to which users can depend on the system during its operation. This is checked by Stability Testing.

5) Usability: The ease with which the user can learn, operate, prepare inputs and outputs through interaction with a system. This is checked by Usability Testing.

6) Scalability: The degree to which a software application can expand its processing capacity to meet an increase in demand. This is tested by Scalability Testing.

7) Interoperability: This parameter checks how well a software system interfaces with other software systems. This is checked by Interoperability Testing.

8) Efficiency: The extent to which a software system can handle capacity, quantity and response time.

9) Flexibility: The ease with which the application can work in different hardware and software configurations, such as minimum RAM and CPU requirements.

10) Portability: The ease with which software can be transferred from its current hardware or software environment to another.

11) Reusability: The extent to which a portion of the software system can be converted for use in another application.

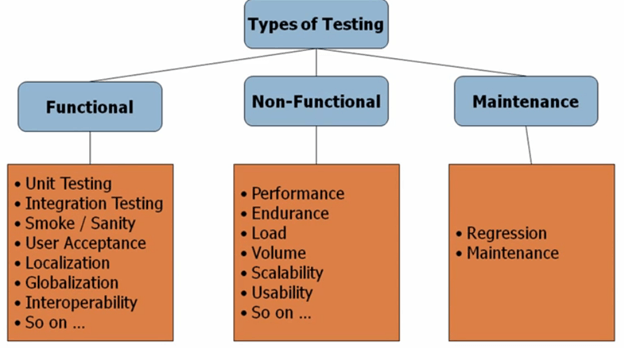

Types of Software Testing

In general, there are three types of testing:

- Functional

- Non-Functional

- Maintenance

g. What is User Acceptance Testing and who does UAT?

User acceptance testing (UAT) is the last phase of the software testing process. During UAT, actual software users test the software to make sure it can handle necessary tasks in real-world scenarios, according to specifications.

h. What is compatibility testing and how is it performed?

Compatibility testing is a sub-category of software testing performed to determine whether the software under test can function on different hardware, operating systems, applications, network environments and mobile devices. It is a type of non-functional testing and is performed only after the software becomes stable.
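One common way to perform compatibility testing is to define a matrix of configurations and run the same test suite on each one. The Python sketch below only builds that matrix. The browser, OS and viewport lists are hypothetical examples, and handing each configuration to a device or browser grid is not shown.

```python
from itertools import product

# Hypothetical support matrix; replace with the platforms your users actually have.
BROWSERS = ["Chrome", "Firefox", "Safari", "Edge"]
OPERATING_SYSTEMS = ["Windows 11", "macOS 14", "Ubuntu 22.04"]
VIEWPORTS = ["desktop", "mobile"]

# Combinations that do not exist (Safari is only shipped for Apple platforms).
UNSUPPORTED = {("Safari", "Windows 11"), ("Safari", "Ubuntu 22.04")}


def build_matrix():
    """Return every (browser, os, viewport) configuration the suite must run on."""
    return [
        (browser, os_name, viewport)
        for browser, os_name, viewport in product(BROWSERS, OPERATING_SYSTEMS, VIEWPORTS)
        if (browser, os_name) not in UNSUPPORTED
    ]


if __name__ == "__main__":
    matrix = build_matrix()
    print(len(matrix), "configurations")  # 4 * 3 * 2 = 24, minus 4 unsupported = 20
    for config in matrix[:3]:
        print(config)
```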
